For example, in a face recognizer a neuron might respond strongly to an image that has an eye or a nose - but notice there is no reason that the features should correspond to the neat labels that humans use. Whatever it is that maximizes the neuron's output is assumed to be the feature that it responds to. The standard way of finding out if this is the case is to take a particular neuron and find the set of inputs that makes it produce a maximal output. At the very least it was supposed that in the last layer each neuron would learn some significant and usually meaningful feature.

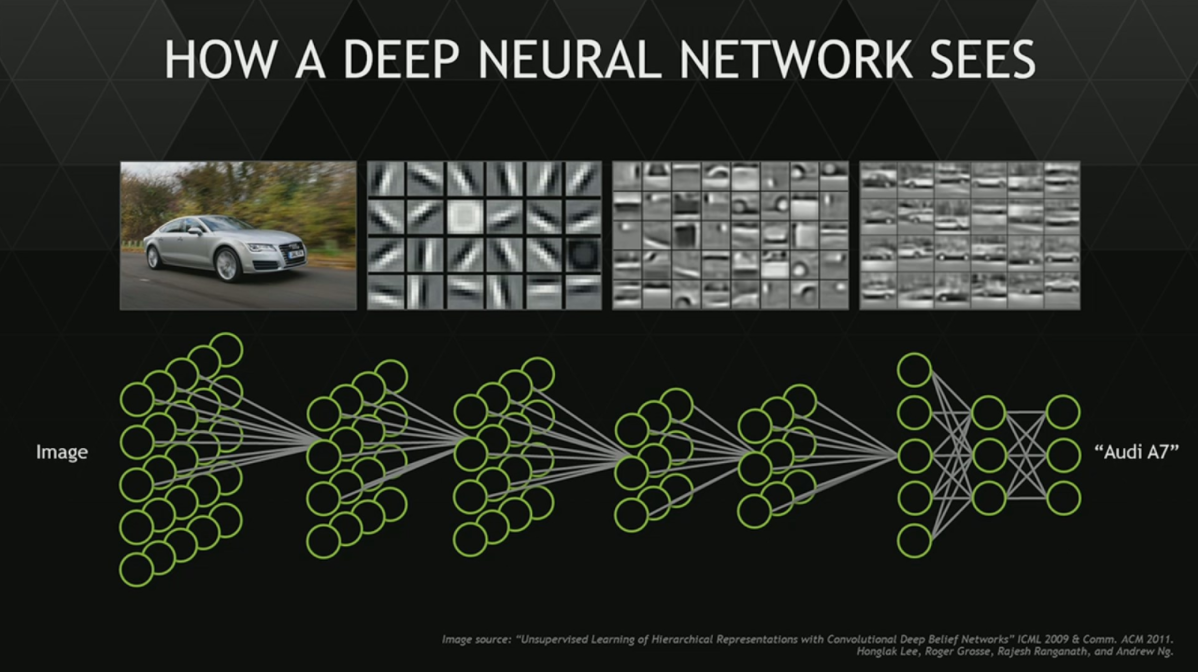

In a multi-layer network it has long been thought that neurons in each level learned useful features for the next level. The first concerns the way that we had long assumed that neural networks organized data. So if you are in a hurry skip down the page. I'm going to tell you about both, but it is the second that is the most amazing. Update: Also see - The Deep Flaw In All Neural Networks.Ī recent paper "Intriguing properties of neural networks" by Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow and Rob Fergus, a team that includes authors from Google's deep learning research project outlines two pieces of news about the way neural networks behave that run counter to what we believed - and one of them is frankly astonishing. The Flaw Lurking In Every Deep Neural NetĪ recent paper with an innocent sounding title is probably the biggest news in neural networks since the invention of the backpropagation algorithm.
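The "find the inputs that maximize a neuron" procedure described above can be sketched in a few lines. This is a minimal illustration, not the paper's code: it assumes a toy single-layer network whose neurons compute ReLU(w · x + b) with random stand-in weights, and it ranks a fixed collection of candidate inputs rather than synthesizing a new one. The names `make_layer`, `activation` and `top_activating_inputs` are hypothetical.

```python
import random

def make_layer(n_inputs, n_neurons, seed=0):
    """Hypothetical toy layer with random weights (not a trained network)."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_neurons)]
    biases = [rng.uniform(-0.1, 0.1) for _ in range(n_neurons)]
    return weights, biases

def activation(layer, neuron, x):
    """Output of a single neuron: ReLU(w . x + b)."""
    weights, biases = layer
    w, b = weights[neuron], biases[neuron]
    return max(0.0, sum(wi * xi for wi, xi in zip(w, x)) + b)

def top_activating_inputs(layer, neuron, inputs, k=3):
    """Indices of the k inputs that drive `neuron` hardest, strongest first."""
    ranked = sorted(range(len(inputs)),
                    key=lambda i: activation(layer, neuron, inputs[i]),
                    reverse=True)
    return ranked[:k]

# Usage: score 100 random candidate inputs against neuron 0.
layer = make_layer(n_inputs=4, n_neurons=2)
data_rng = random.Random(1)
dataset = [[data_rng.uniform(0.0, 1.0) for _ in range(4)] for _ in range(100)]
best = top_activating_inputs(layer, neuron=0, inputs=dataset, k=5)
for i in best:
    print(i, round(activation(layer, 0, dataset[i]), 3))
```

Nothing in this procedure checks whether the winning inputs share a property a human would name; that interpretation is left to whoever inspects them, which is exactly the assumption the first finding calls into question.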
